RBlocks - Block Storage Specification (Draft)

1. Introduction

1.1 Purpose of the system

In the RChain architecture, blocks are effectively unlimited in size because this allows validators to produce blocks with large payloads and larger fee structures. In a proof-of-stake system, allowing large blocks creates an incentive for validators to acquire user traffic, and therefore transactions. This represents a significant contrast to proof-of-work systems, where miners have an incentive to mine blocks whether transactions exist to fill them, or not.

In order to handle large block sizes, RChain's node software must segregate block storage into several parts. This document describes how the complete block data is stored on disk, how it can be accessed from within the node, and the semantics of the data structure and storage employed. Storage of the directed acyclic graph (DAG) is not covered here. (See <TBD>)

The node writes complete block data to disk, in order to minimize network synchronization across node restarts. If a node is down for extended periods, it will be forced to perform synchronization with others nodes in order to update both its RBlocks data store, the BlockDAG, and the LatestMessages table for validators. The algorithm by which this update occurs and the network protocols enabling it are beyond the scope of this document. (See <TBD>)

1.1.1 Use Cases

The most significant and obvious use case for RBlocks is retrieval of the raw block content for Casper and for Rholang's Tuplespace. Casper needs to be able to determine whether two DAG tips conflict with each other, or not. In the case where they do not conflict, Casper needs to merge the tips. In the case where they do conflict, it must select between them. As Casper updates its notion of which transactions are preferred, it must provide this data to the Rholang interpreter's replay functionality to allow the Tuplespace to update its internal state according to the selected branch of the BlockDAG.

1.2 Design goals

The design goals of the block storage include:

- Looking up a block by its hash

- Inserting a new blockhash-block pair

- Storing the block bodies with little disk space overhead

- Storing tens of millions of blocks without significant performance hit

- Avoiding full database corruption on system crashes

1.3 Definitions, acronyms, and abbreviations

1.4 References

2. Current software architecture

The existing API for block storage is represented by BlockStore trait.

trait BlockStore[F[_]] {

def put(blockHash: BlockHash, blockMessage: BlockMessage): F[Unit]

def get(blockHash: BlockHash): F[Option[BlockMessage]]

def find(p: BlockHash => Boolean): F[Seq[(BlockHash, BlockMessage)]]

def put(f: => (BlockHash, BlockMessage)): F[Unit]

def apply(blockHash: BlockHash)(implicit applicativeF: Applicative[F]): F[BlockMessage]

def contains(blockHash: BlockHash)(implicit applicativeF: Applicative[F]): F[Boolean]

// Should be deleted after designing the new block store implementation

def asMap(): F[Map[BlockHash, BlockMessage]]

def clear(): F[Unit]

def close(): F[Unit]

}

Its only implementation is LMDBBlockStore which plainly stores blockhash-blockbody pairs in LMDB.

The proposed implementation will reuse the existing trait for API, but asMap() method should be removed from it as it would be harmful to use such a method once the number of blocks will surpass several thousands.

3. Proposed software architecture

3.1 Overview

The proposed implementation LMDBIndexBlockStore of the existing API is a combination of an index backed up LMDB and a file containing an arbitrarily ordered sequence of block bodies.

final class LMDBIndexBlockStore[F[_]] private (

lock: MVar[F, Unit],

indexEnv: Env[ByteBuffer],

indexBlockOffset: Dbi[ByteBuffer],

blockBodiesFileResource: Resource[F, RandomAccessFile]

)(implicit syncF: Sync[F])

extends BlockStore[F] {

private[this] def withReadTxn[R](f: Txn[ByteBuffer] => R): F[R]

def get(blockHash: BlockHash): F[Option[BlockMessage]] =

for {

_ <- lock.take

result <- blockBodiesFileResource.use { f =>

withReadTxn { txn =>

Option(indexBlockOffset.get(txn, blockHash.toDirectByteBuffer))

.map(_.getLong)

.map { offset =>

f.seek(offset)

val size = f.readInt()

val bytes = Array.fill[Byte](size)(0)

f.readFully(bytes)

BlockMessage.parseFrom(ByteString.copyFrom(bytes).newCodedInput())

}

}

}

_ <- lock.put(())

} yield result

def find(p: BlockHash => Boolean): F[Seq[(BlockHash, BlockMessage)]]

def put(f: => (BlockHash, BlockMessage)): F[Unit]

def clear(): F[Unit]

def close(): F[Unit]

}

object LMDBIndexBlockStore {

final case class Config(

indexPath: Path,

indexMapSize: Long,

blockBodiesPath: Path,

indexMaxDbs: Int = 1,

indexMaxReaders: Int = 126,

indexNoTls: Boolean = true

)

def apply[F[_]: Sync: Concurrent: Monad](config: Config): F[LMDBIndexBlockStore[F]]

}

An instance of LMDBIndexBlockStore can be created by invoking LMDBIndexBlockStore.apply provided by the LMDBIndexBlockStore.Config configuration containing the path and parameters of LMDB index and path to the block bodies file. It invokes the LMDBIndexBlockStore constructor with the following arguments:

lock- An instance ofcats.effect.concurrent.MVarwhich is used as a mutex for synchronizationindexEnv- LMDB environment created by using the provided LMDB configurationindexBlockOffset- LMDB database interface created by using the provided LMDB configurationblockBodiesFileResource- A random access file (which is derived from the provided configuration) where the block bodies are stored wrapped intocats.effect.Resourceto capture the effectul allocation of the resource

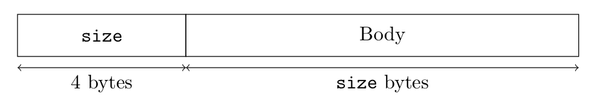

An example implementation of get method can be seen in the code block above. It acquires lock and accesses blockBodiesFileResource's internal RandomAccessFile. After that, get looks up the offset of the corresponding block body and seeks to this position in block bodies file. The first 4 bytes at this position are an integer size representing the length of the body. Reading the following size bytes and parsing them into BlockMessage results in the requested block.

Other operations defined in BlockStore API can be implemented in the similar fashion.

3.2 Software/hardware mapping

3.2.1 Memory allocation

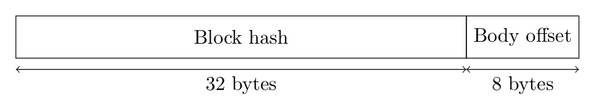

LMDB index is the only data structure which contributes to memory usage as LMDB uses memory-mapping. One key-value pair in LMDB index consists out of 32 bytes of block hash and 8 bytes of file offset. Hence, 40 bytes are needed to store one key-value pair and 1 GiB of RAM is able to hold almost 27M pairs. However, this does not include the overhead by LMDB for storing B+ tree layout which according to several benchmarks is quite high and can reach ~70%. After taking this in consideration, the approximate amount of pairs 1 GiB can handle is ~16M.

3.2.2 Disk Memory

The calculations for LMDB index memory allocation are also true for its disk space usage (including the overhead).

Block bodies are variable in size and it is impossible to calculate how much disk space they use up and the proposed solution's overhead is only 4 bytes (length of the body in bytes) per block body which add only 1 GiB of overhead every ~270M blocks.

3.3 Persistent data management

Storing blockhash-bodyoffset pairs is delegated to LMDB. A single pair's structure is shown on the following figure.

Block bodies are stored in the specified file and written in arbitrary order. A header containing the size of the body preceded the actual content of the body as shown on the following figure.

3.4 Global software control

Gets, finds and puts require acquiring the lock and hence mutually exclusive.

3.5 Boundary conditions

- On node startup LMDB is initialized using the provided configuration and the block body file is opened

- On node shutdown shutdowns LMDB and closes the block body file

- On node error LMDB will not have to do any additional actions as it is system crash resistant. The block body storage can have some partially-written data in the end of the file, but index will not point to this corrupted part of the file as index is updated after the successful write to the block body file.