Strategies for improving integration testing

Background

Many members of the development team report frustration with the workflow experience related to integration testing.

We've had two workflows for integration testing to date:

Pros and cons shown below are gleaned from discord and other conversations. They are likely incomplete. You are welcome to add to the list. Please don't remove anything. If you disagree, please add a comment.

| Old system | Current system | |

|---|---|---|

| Description |

|

|

| Pro |

|

|

| Con |

|

|

Discussion

Please add your thoughts, experiences, and recommendations to this section. Select edit to add content.

Some content pulled from discussion in discord and notes from the RSpace/RNode standup on July 13.

Your thoughts and experiences

| Your name | Your thoughts and experiences |

|---|---|

| Michael Stay (Unlicensed) | Current system assumes devs watch to see if PR breaks Point of not requiring integration tests before merge was to preserve sanity I don't support the requirement that integration tests be run locally prior to commit. This requirement only makes sense if the testing can be automated.

It is not necessary to run integration tests before submitting; that's the whole point of making automated integration tests run after submission time. It IS your responsibility to make sure that your PR doesn't break the integration tests. If you choose to run them locally or choose only to merge PRs in the morning, you're less likely to have trouble. It's not OK to merge a PR late in the day and disappear for the weekend. At the moment, the project is small enough that it's possible to run integration tests before submission. Jeremy has indicated that with a few days free, he could make it so that integration tests run only after a PR has been approved, so early changes won't trigger a CI run. However, once testnet launches, we'll have test, staging, and production servers running this software live; eventually we'll have integration with other services like exchanges or cryptofex tools, and so on. It will rapidly become impossible to run all integration tests before submitting, so we have to start building up behaviors, processes, and protocols for dealing with the fact that unit tests will be the only way to detect errors before merging a PR. |

| Henry Till | Seems like some issues that came up this week could have been prevented with better local tests |

| Henry Till | |

| Michael Stay (Unlicensed) | An on-call system for monitoring CI/CD (shared by the dev team) is not desirable. Although, it may be coming in the future. |

| Kelly Foster | It's unclear that moving from the old system to current system that we've realized any practical time-savings It does appear that the current system is more frustrating (noisy, triage, emergency) |

| Medha Parlikar (Unlicensed) | I"m really happy that the integration tests are doing their job! it's GREAT I would prefer that folks run the integration tests locally for large changes. |

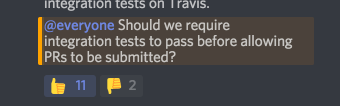

Poll run in discord on July 9 by Michael Stay (Unlicensed) |

Recommendations for changes

| Your name | Change recommendation | +/- (feedback from others) |

|---|---|---|

| Michael Stay (Unlicensed) and Henry Till | Add better and shorter unit tests (like a phase 1 of unit tests). Improve unit test suite | |

| Jeremy Busk | Devs can run integration tests locally for large commits | |

| Henry Till | Preference for using the old system (merges blocked until integration tests pass) |

|

| Jeremy Busk | Trigger integration tests after a PR has been reviewed and approved, rather than current system at time of merge. Estimates 3-4 days of work to implement. | |

| Łukasz Gołębiewski (Unlicensed) | One side note regarding integration tests - scaling is an option, but for a price https://circleci.com/pricing/ | |

| Sebastian Bach | If we continue with expectation to run integration tests locally, need a workshop on how to do this |